vSAN 7.0: Ready for Modern Applications and more

With the new version of vSphere (see also https://my-sddc.net/?p=833) comes a new version of vSAN, and, just like vSphere, it brings a lot of new and improved functionality.

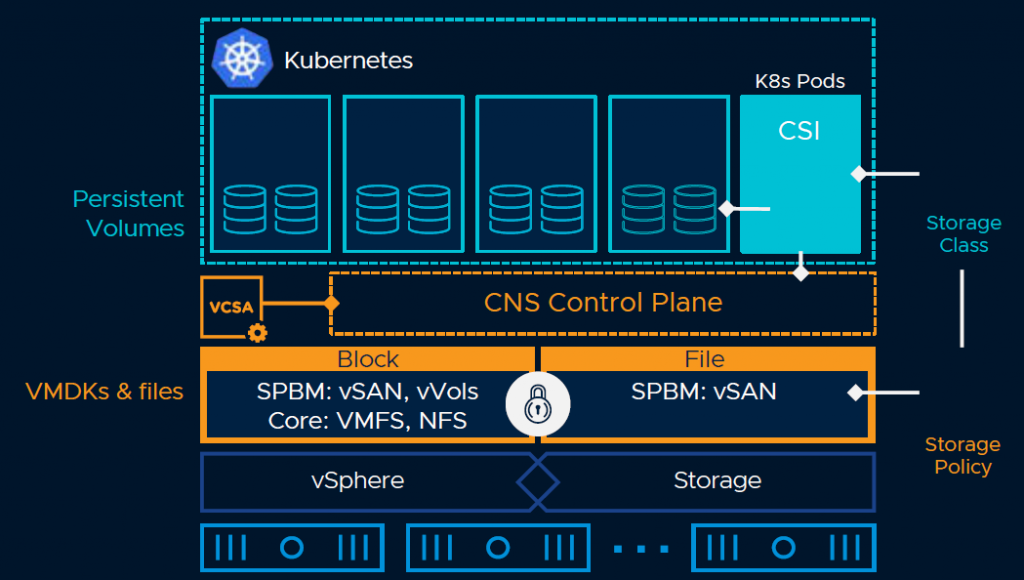

Most importantly, vSAN is aligned with the rest of the VMware portfolio to support modern applications. vSAN now offers “Cloud Native Storage” (CNS) to natively support Kubernetes.

Through CNS, storage used by modern applications is treated as a so-called first-class citizen: vSAN can offer persistent volumes, apply policies to them, take snapshots and use volume-based encryption.

Other Enhancements

But that is not all; far from it. vSAN 7.0 introduces a lot of other new functionality and improvements.

Native File Services

Yes, vSAN finally offers file services natively, but unfortunately, in this first iteration, only NFS (both v4.1 and v3). These NFS shares can be used for cloud-native as well as for traditional workloads on vSAN. SMB is currently not supported.

It is also not meant to replace the large filers that organizations currently use for NFS-based storage, nor to provide storage to non-vSAN vSphere clusters (this is not supported, just as with iSCSI on vSAN).

Stretched/2-Node improvements

A lot of improvements have been made around stretched clusters and 2-node direct-connect clusters.

With vSAN 7.0 it is possible to override the default gateway for the vSAN VMkernel adapter (VMK), which makes it a lot easier to connect over layer-3 networks. In previous versions, you had to manually add static routes on each host to get connectivity; with vSAN 7.0, you can simply set a different default gateway on the interface. vSAN doesn’t get its own TCP/IP stack like vMotion does, but this still makes connecting vSAN over layer 3 a lot easier. By the way, this can also be used for (larger) “normal” clusters, for instance when using multiple racks with ToR switches that have layer-3 connectivity to the rest of the network.
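To illustrate why a per-interface gateway simplifies layer-3 designs, here is a minimal Python model of next-hop selection for the vSAN VMkernel adapter. It is not a VMware API: the `VmkInterface` class, the `next_hop` function, the interface name and all IP addresses are hypothetical. The point it shows is that, without an override, every remote vSAN subnet needs its own static route, while a single gateway override covers all of them.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class VmkInterface:
    """Hypothetical, simplified model of a vSAN VMkernel adapter."""
    name: str
    address: str                             # interface address with prefix, e.g. "10.10.1.11/24"
    gateway_override: Optional[str] = None   # per-adapter default gateway (vSAN 7.0 option)
    static_routes: dict = field(default_factory=dict)  # destination network -> gateway (pre-7.0 approach)


def next_hop(vmk: VmkInterface, destination: str) -> Optional[str]:
    """Return the next hop for vSAN traffic to `destination`.

    Returns the destination itself if it is on the local subnet, otherwise the
    gateway of the most specific matching static route, otherwise the gateway
    override. None means this model finds no vSAN-specific path (the host would
    fall back to the default TCP/IP stack gateway, typically on the management network).
    """
    dest = ipaddress.ip_address(destination)
    if dest in ipaddress.ip_interface(vmk.address).network:
        return destination  # directly connected, no router involved
    best_gateway, best_prefix = None, -1
    for network, gateway in vmk.static_routes.items():
        net = ipaddress.ip_network(network)
        if dest in net and net.prefixlen > best_prefix:  # longest-prefix match
            best_gateway, best_prefix = gateway, net.prefixlen
    if best_gateway is not None:
        return best_gateway
    return vmk.gateway_override


# Pre-7.0 style: one static route per remote vSAN subnet.
legacy = VmkInterface("vmk1", "10.10.1.11/24", static_routes={"10.20.1.0/24": "10.10.1.1"})
# vSAN 7.0 style: a single gateway override on the adapter.
modern = VmkInterface("vmk1", "10.10.1.11/24", gateway_override="10.10.1.1")

print(next_hop(legacy, "10.30.1.12"))  # None: this subnet has no static route yet
print(next_hop(modern, "10.30.1.12"))  # 10.10.1.1
```

With static routes, every new rack or site subnet means touching every host again; with the override, adding a subnet only requires the routers to know about it.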

Stretched Cluster recovery enhancements

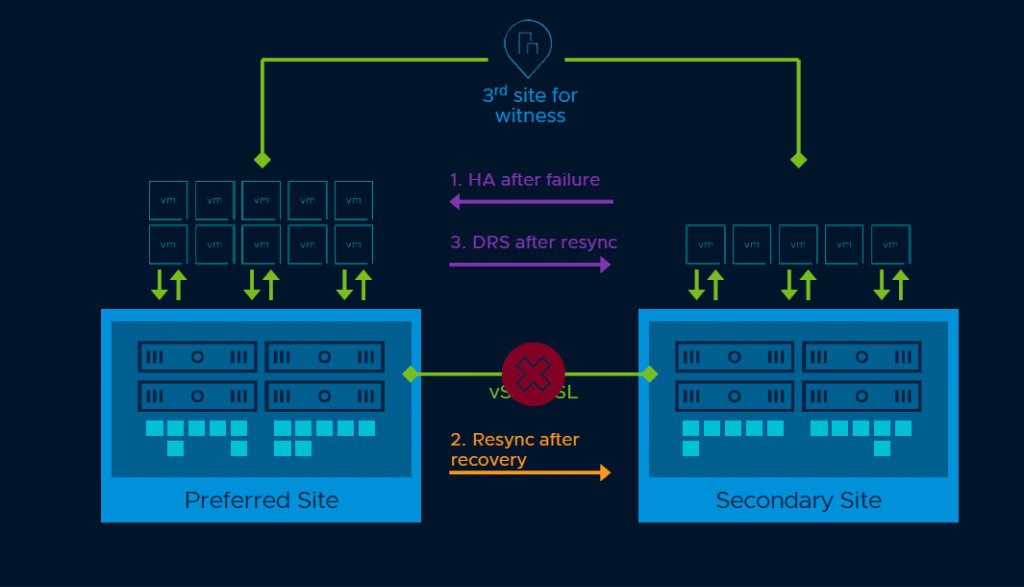

After a site in a stretched cluster has failed and recovered, vSAN has to resync the affected components by copying the changes to the recovered site. At the same time, DRS wants to migrate virtual machines back to their preferred site, based on the configured rules.

This poses two problems. First, the bandwidth used by these migrations competes with the resync and increases the time it takes for the resync (and thus the reprotection) to complete. Second, if a virtual machine is migrated before the resync has completed, it will still get its storage from the remote site.

To address this, DRS is now aware of resync activity and waits for the resync to complete before moving virtual machines back to the recovered site.
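The decision DRS now makes can be sketched as a simple gate. This is a minimal Python model, not DRS's actual algorithm: it assumes the gate is applied per VM, based on the resync state of that VM's objects, and the `VmState` class, the `vms_ready_to_move` function and the VM names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VmState:
    """Hypothetical view of one VM after a site has recovered."""
    name: str
    current_site: str
    preferred_site: str        # site from the DRS VM/host rule
    resync_bytes_left: int     # outstanding vSAN resync for this VM's objects


def vms_ready_to_move(vms: list) -> list:
    """Names of VMs that may be migrated back to their preferred site now.

    A VM is held back while its objects are still resyncing, so vMotion does not
    compete with the resync for inter-site bandwidth and the VM does not end up
    reading its data from the remote site.
    """
    return [
        vm.name
        for vm in vms
        if vm.current_site != vm.preferred_site and vm.resync_bytes_left == 0
    ]


vms = [
    VmState("app01", current_site="B", preferred_site="A", resync_bytes_left=0),
    VmState("db01", current_site="B", preferred_site="A", resync_bytes_left=8 * 2**30),
    VmState("web01", current_site="A", preferred_site="A", resync_bytes_left=0),
]
print(vms_ready_to_move(vms))  # ['app01']
```

`db01` stays where it is until its 8 GiB of outstanding resync is done; `web01` is already on its preferred site.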

After such an event, virtual machines bound to one site (through a DRS “must” rule) will read their storage from the remote site. In this situation, the recovery I/O sent across the inter-site link is reduced to improve virtual machine performance.

Resilience with Stretched Cluster and 2-Node Topologies

When the witness appliance is replaced, the replacement workflow now immediately repairs the objects on the new witness to regain compliance as soon as possible, instead of waiting for the repair time-out (60 minutes by default) to expire.
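The gain is in the timing. A minimal sketch, assuming the earlier behavior can be simplified to “wait for the repair timer, and for a new witness to exist”; the `witness_repair_start` function and the example timestamps are hypothetical, only the 60-minute default of the repair timer is vSAN's.

```python
from datetime import datetime, timedelta

# vSAN waits this long (the object repair timer, 60 minutes by default)
# before rebuilding components that are absent.
DEFAULT_REPAIR_DELAY = timedelta(minutes=60)


def witness_repair_start(failed_at: datetime, replaced_at: datetime,
                         immediate_repair: bool,
                         repair_delay: timedelta = DEFAULT_REPAIR_DELAY) -> datetime:
    """Return when repairing the witness components starts (simplified model).

    immediate_repair=True models the vSAN 7.0 replace-witness workflow, which
    starts the repair as soon as the new witness is in place. Otherwise the repair
    waits for the repair timer, and cannot start before a new witness exists.
    """
    if immediate_repair:
        return replaced_at
    return max(replaced_at, failed_at + repair_delay)


failed = datetime(2020, 3, 10, 12, 0)
replaced = datetime(2020, 3, 10, 12, 15)
print(witness_repair_start(failed, replaced, immediate_repair=True))   # 2020-03-10 12:15:00
print(witness_repair_start(failed, replaced, immediate_repair=False))  # 2020-03-10 13:00:00
```

In this example, objects regain compliance 45 minutes earlier, which is 45 minutes less exposure to a second failure.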

Memory usage for vSAN

Until now, it was not easy to get insight into the memory usage of vSAN. In the new version, this information is available either in the GUI or through the API. The usage over time can also be viewed, to see the effect of certain changes to the environment.
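Once usage over time is available, comparing the period before and after a change is simple arithmetic. A minimal sketch; the `(timestamp, value)` sample format, the `memory_delta` function and the sample values are assumptions for illustration (for example values read off the performance chart), not the actual API output.

```python
from datetime import datetime
from statistics import mean


def memory_delta(samples: list, change_at: datetime) -> float:
    """Average memory usage after `change_at` minus the average before it.

    `samples` is a list of (timestamp, value) tuples in one unit (e.g. GB).
    Raises ValueError if there are no samples on one side of the change.
    """
    before = [value for ts, value in samples if ts < change_at]
    after = [value for ts, value in samples if ts >= change_at]
    if not before or not after:
        raise ValueError("need samples both before and after the change")
    return mean(after) - mean(before)


samples = [  # hypothetical hourly samples in GB
    (datetime(2020, 3, 10, 0), 10.0),
    (datetime(2020, 3, 10, 1), 11.0),
    (datetime(2020, 3, 10, 2), 15.0),
    (datetime(2020, 3, 10, 3), 17.0),
]
print(memory_delta(samples, change_at=datetime(2020, 3, 10, 2)))  # 5.5
```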

Insight into vSphere Replication data

vSAN 7 now provides insight into the data used by vSphere Replication, including the total amount of storage consumed by these objects. In my opinion, this is very useful from a planning and sizing perspective.

Support for hot-plug NVMe devices

Until now, a hardware failure of an NVMe device meant evacuating the host, shutting it down, replacing the device and starting the host again. With support for hot-plugging NVMe devices (in both vSphere and vSAN), replacing a failed device is a lot easier. However, this is only supported on selected OEM platforms.

Support for thin-provisioned shared disks

In previous versions, shared disks (for instance for Oracle RAC clustering) had to be eager zeroed thick. This is quite inefficient, since all storage has to be provisioned up front. On vSAN, this is done by creating a storage policy with Object Space Reservation (OSR) set to 100.

With vSAN 7, it is possible to use thin-provisioned (OSR=0) disks for Oracle RAC and other shared virtual disks that use the multi-writer flag (MWF).
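The difference is easiest to see in numbers. A minimal sketch of the up-front reservation, assuming RAID-1 mirroring with FTT=1 (two full copies) and ignoring witness components and metadata overhead; the `reserved_capacity_gb` function is hypothetical.

```python
def reserved_capacity_gb(disk_size_gb: float, osr_percent: int, copies: int = 2) -> float:
    """Raw vSAN capacity reserved up front for one virtual disk (simplified).

    osr_percent: Object Space Reservation from the storage policy (0-100).
    copies: number of full data copies; 2 assumes RAID-1 mirroring with FTT=1.
    Witness components and metadata overhead are ignored.
    """
    if not 0 <= osr_percent <= 100:
        raise ValueError("OSR must be between 0 and 100")
    return disk_size_gb * osr_percent / 100 * copies


print(reserved_capacity_gb(500, 100))  # 1000.0: eager zeroed, fully reserved
print(reserved_capacity_gb(500, 0))    # 0.0: thin, capacity consumed as data is written
```

So a 500 GB shared disk for an Oracle RAC cluster no longer claims 1,000 GB of raw capacity on day one.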