Holodeck LAB: Upgrading to VCF9 (on unsupported hardware)

VCF9 is here and I want to look at it. That said, I have some older hardware, so I am not sure if I will be able to run VCF9 on it. But let’s explore.

Disclaimer: This is unsupported, and there is a reason for that. So don’t do this in production (of course, a nested environment would also not be used in production, but you get my drift).

- Install vCenter Server

- Upgrade physical host

- Upgrade SDDC Manager

- Upgrade Management Domain

- (Install VCF Operations)

Install vCenter Server and Upgrade physical host

The first step is to upgrade the physical host itself, which is fairly easy. I first had to deploy a new vCenter Server (version 9), because I run my vCenter Server inside VMware Workstation and there is no easy way to upgrade it in such an environment. After installing the new vCenter Server, I moved my hosts to it.

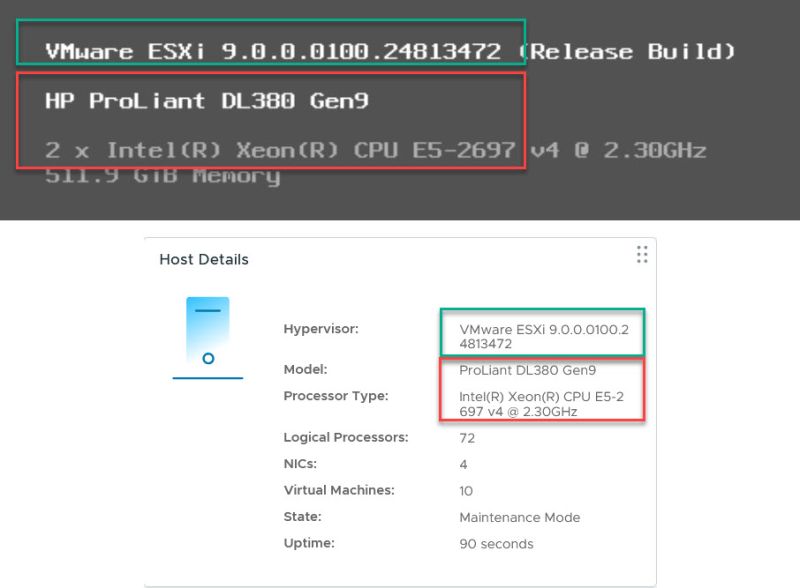

Then the next step was making sure vLCM is able to do an upgrade of the physical host. Since this host is relatively old (but it was a lot cheaper than buying a host that would support VCF 9), we have to make some adjustments.

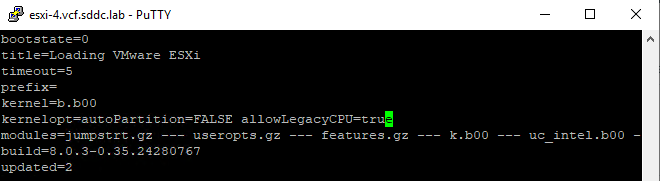

For this, I changed the /bootbank/boot.cfg file and added allowLegacyCPU=true to the kernel boot options, as is described in this blog post from William Lam: https://williamlam.com/2022/10/quick-tip-automating-esxi-8-0-install-using-allowlegacycputrue.html.
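The boot.cfg change boils down to appending one token to the `kernelopt=` line. As a rough sketch (I made the edit by hand, so this is not the method used above), the Python helper below shows that transformation on the text of the file. The function name and the trimmed sample contents are made up for illustration; only the `kernelopt=` key and the `allowLegacyCPU=true` option come from this article and the linked post. It only transforms text; reading and writing /bootbank/boot.cfg on the host is left out.

```python
def add_legacy_cpu_option(boot_cfg_text, option="allowLegacyCPU=true"):
    """Return boot.cfg text with `option` present on the kernelopt= line.

    Hypothetical helper: the edit is idempotent, so running it twice
    does not add the option a second time.
    """
    lines = boot_cfg_text.splitlines()
    found = False
    for i, line in enumerate(lines):
        if line.startswith("kernelopt="):
            found = True
            # Kernel options are whitespace-separated tokens.
            existing = line[len("kernelopt="):].split()
            if option not in existing:
                existing.append(option)
            lines[i] = "kernelopt=" + " ".join(existing)
    if not found:
        raise ValueError("no kernelopt= line found in boot.cfg text")
    return "\n".join(lines) + "\n"


# Trimmed, illustrative boot.cfg contents (not copied from a real host).
SAMPLE_BOOT_CFG = "bootstate=0\nkernel=b.b00\nkernelopt=autoPartition=FALSE\n"

if __name__ == "__main__":
    print(add_legacy_cpu_option(SAMPLE_BOOT_CFG))
```

Keeping the edit idempotent matters here because the same change is applied to several hosts, and a repeated run should leave an already patched file untouched.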

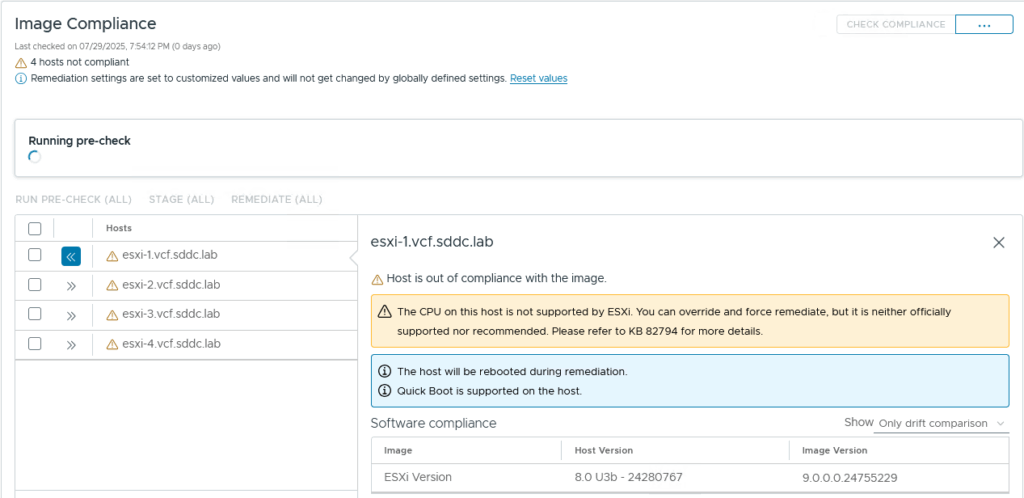

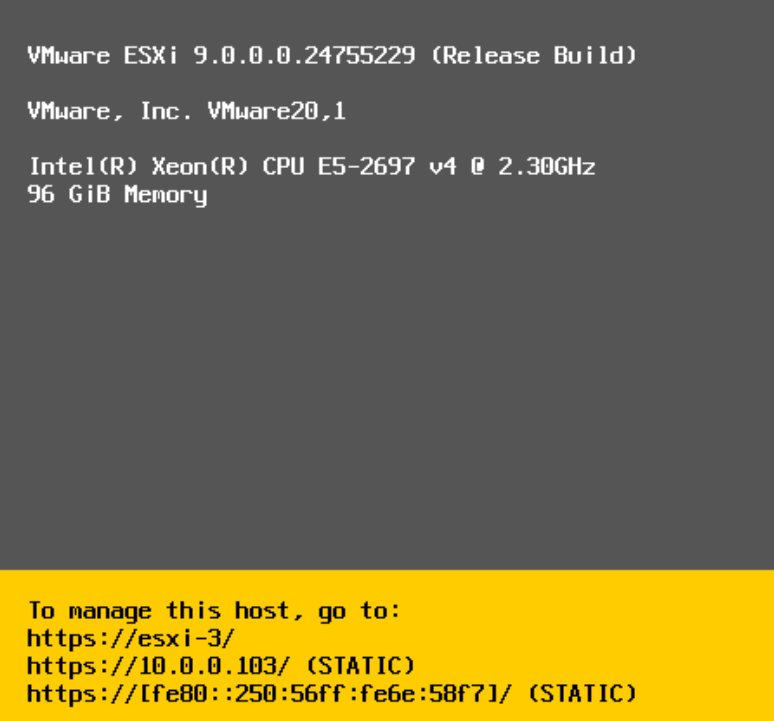

After I changed that, vLCM allows me to upgrade to ESXi 9, even though the processor is an E5-2697 v4, which is not supported for ESXi 9:

Upgrade SDDC Manager

Then the question: does this also work for VCF? In a nutshell, yes. I changed the same file on the four nested ESXi hosts in my Management Domain:

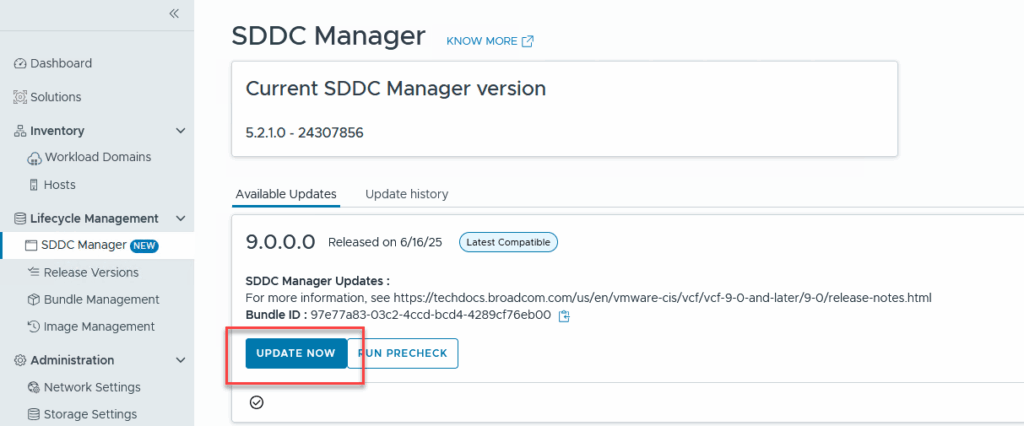

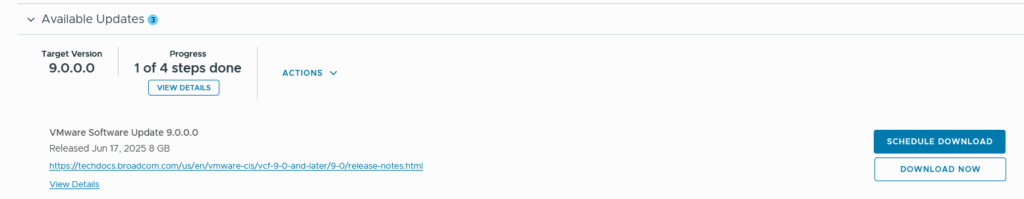

But the actual upgrade of the hosts is the last step in the process, so first we upgrade the SDDC Manager. I don’t have a screenshot of downloading the bundle for SDDC Manager version 9, but the download option sits in the same place where the “Update Now” button appears once the download has succeeded (see the picture below).

I also had some other issues to resolve: old and expired passwords (the lab had been powered down for a while) and an error with the vSAN HCL file in the SDDC Manager, which was easily resolved with the help of: https://stephanmctighe.com/2025/03/13/vsan-hcl-db-out-of-date-how-to-update-via-sddc-manager/.

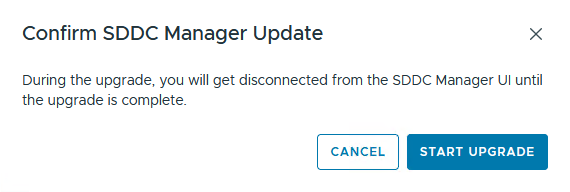

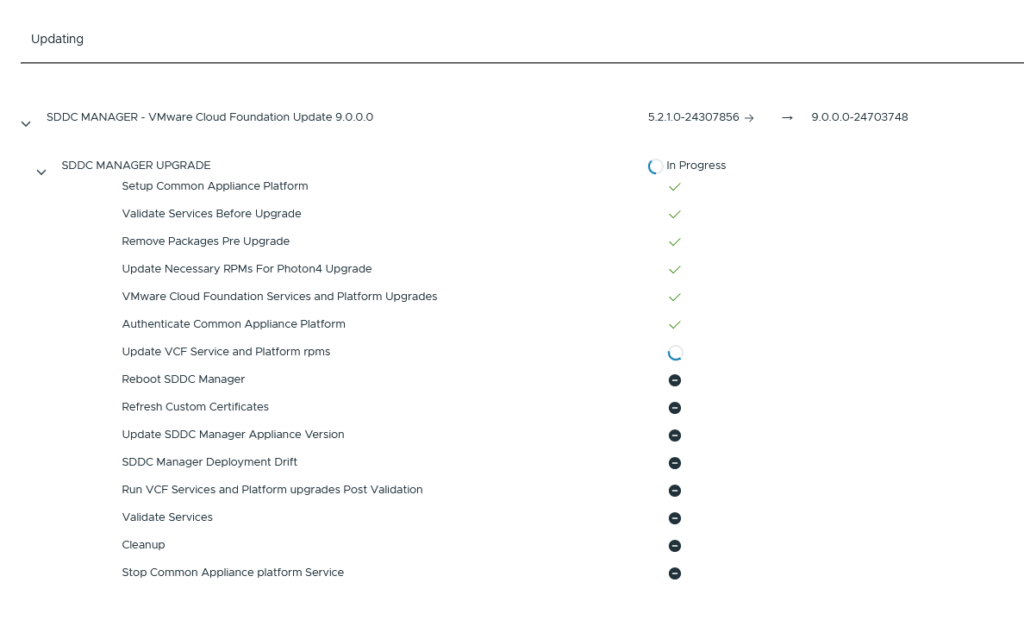

Once both were done, the SDDC Manager could be upgraded (of course, I made a snapshot first):

And that gets us underway:

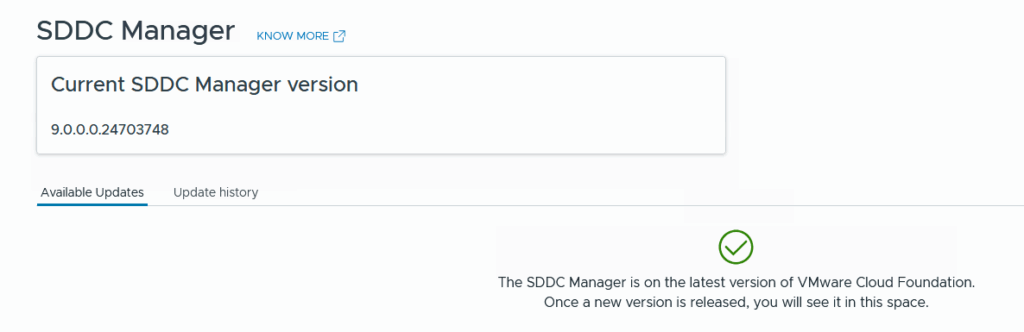

And after a while, the login screen arrives again. After logging in, we see that SDDC Manager is upgraded to version 9:

Upgrade Management Domain

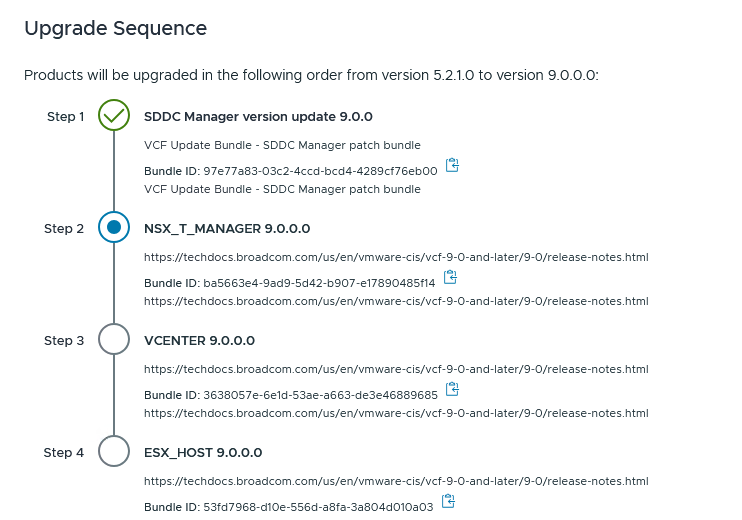

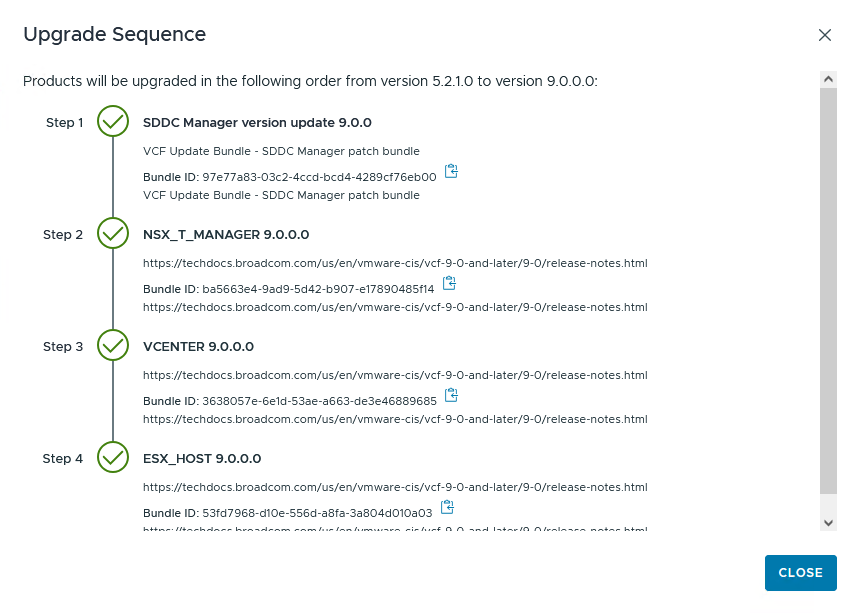

This is where the fun begins. The order that VCF follows is:

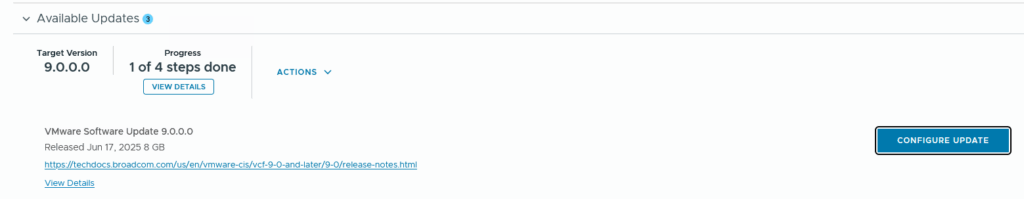

So the first step is to download the software for NSX:

After the successful download and validation, I can begin the NSX upgrade.

First a precheck:

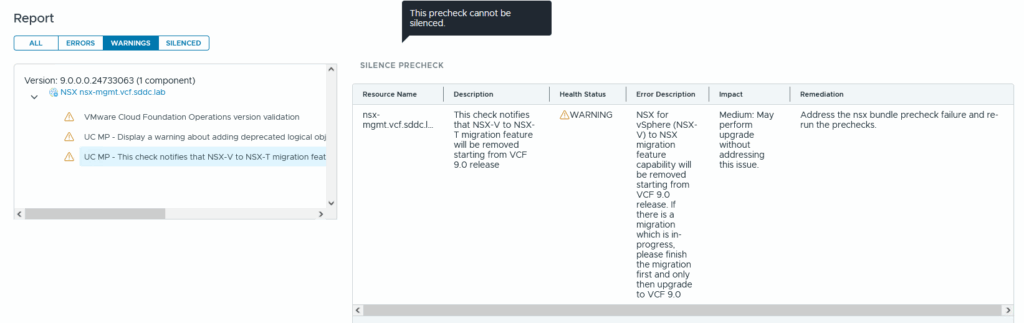

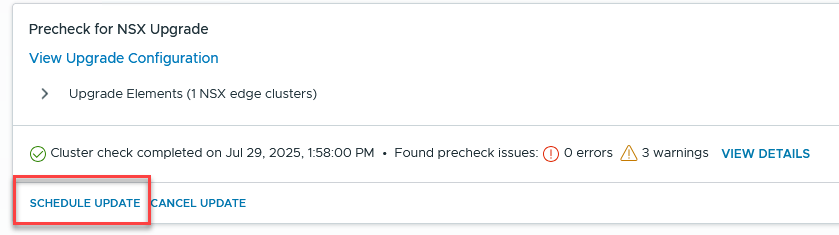

And after the precheck is done (which does take quite a while), we see a couple of (non-blocking) warnings:

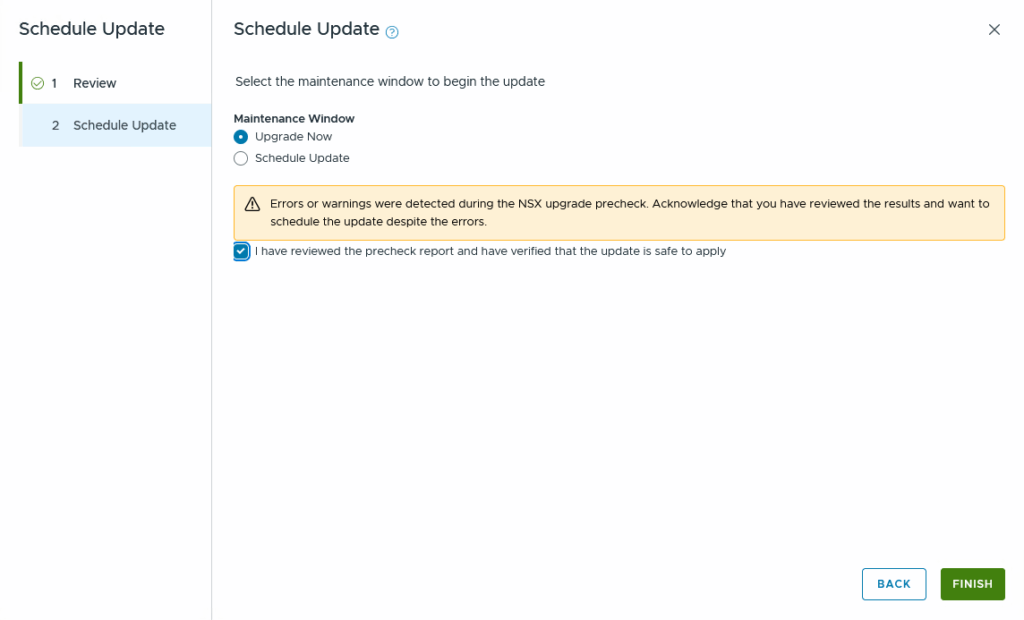

But we can go through with the upgrade itself:

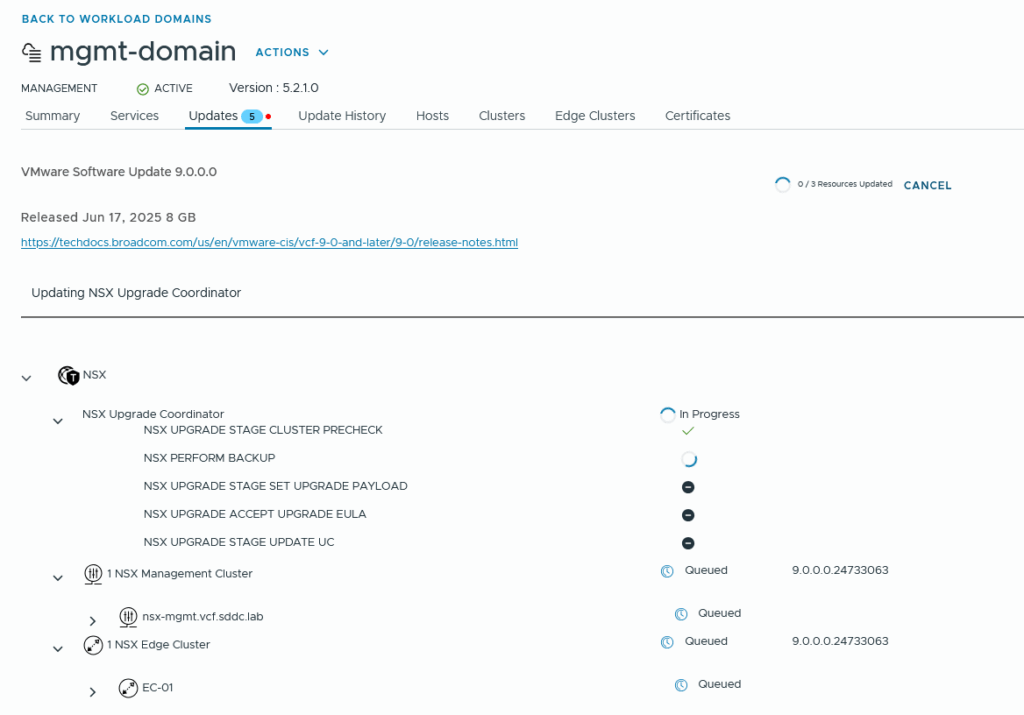

And there she goes:

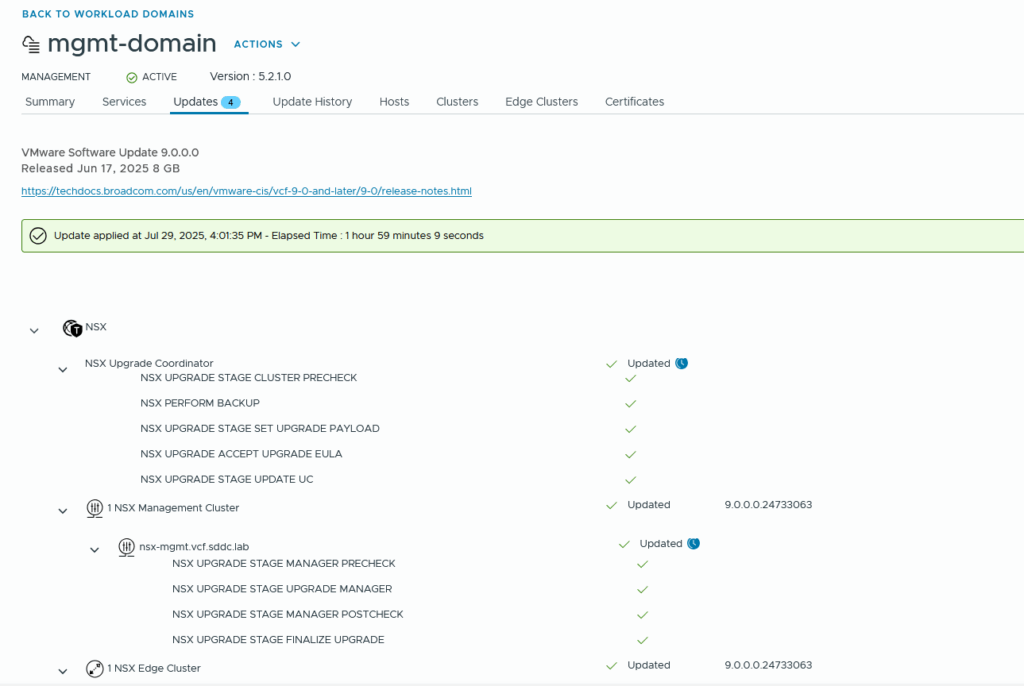

And after almost two hours:

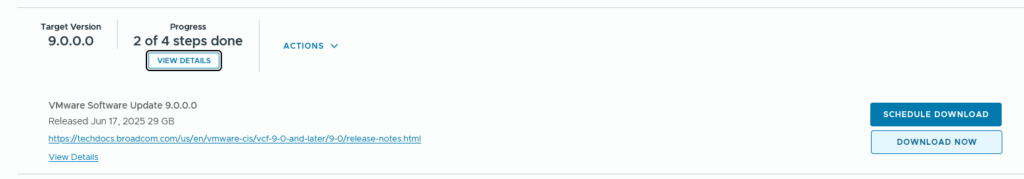

Next step: vCenter Server:

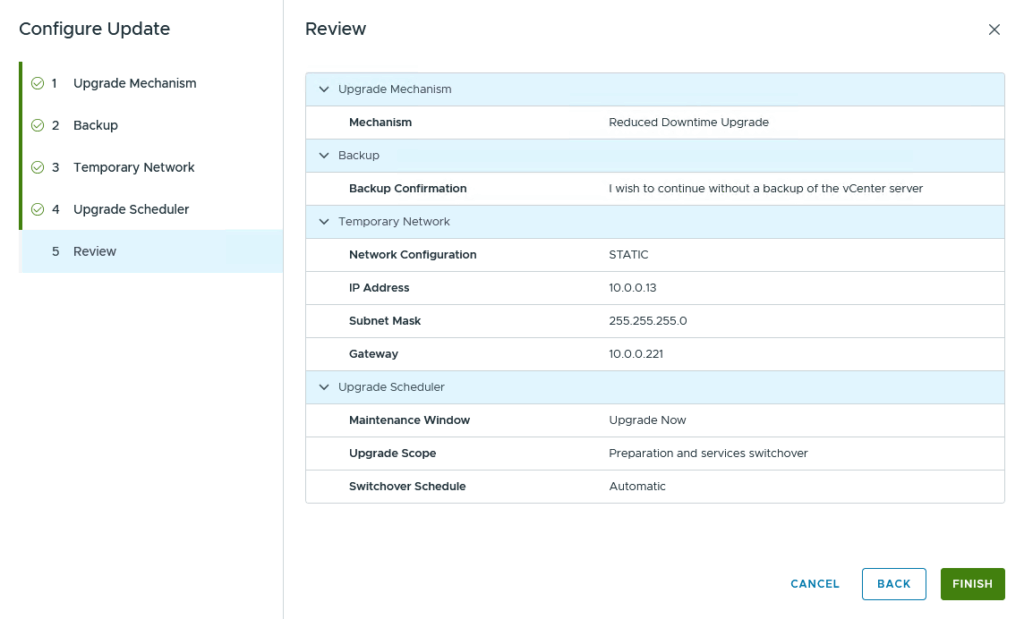

After the download, we can Configure the Upgrade:

It’s pretty straightforward.

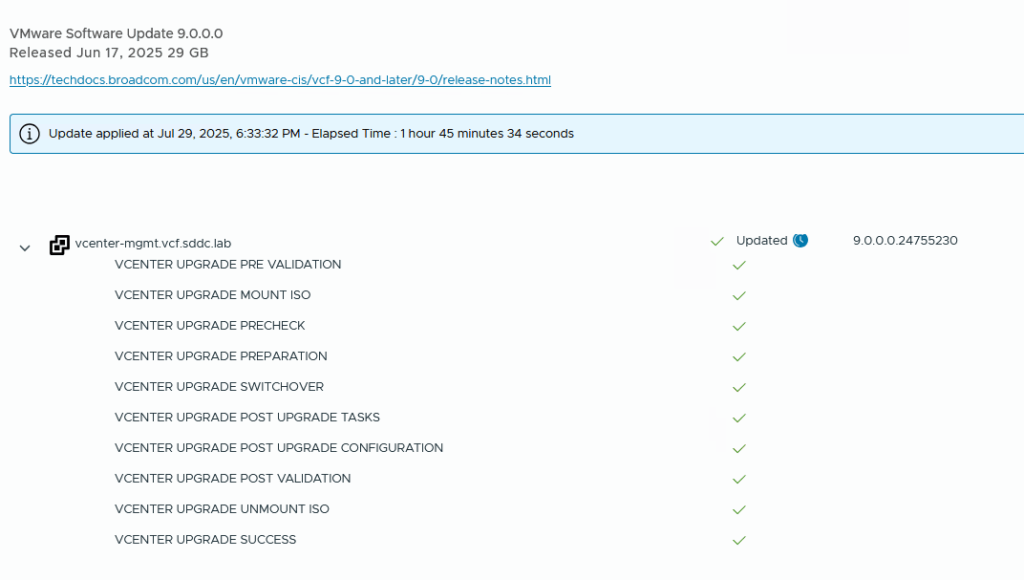

This one takes about an hour and three quarters in my lab environment:

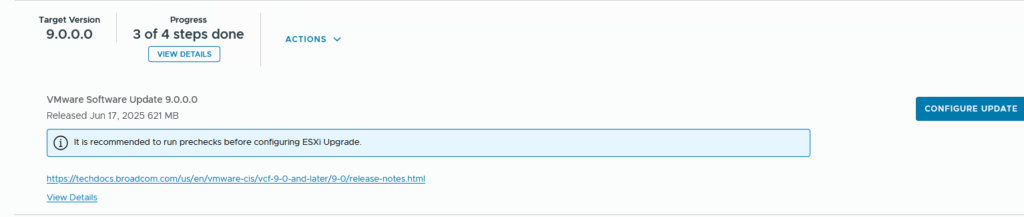

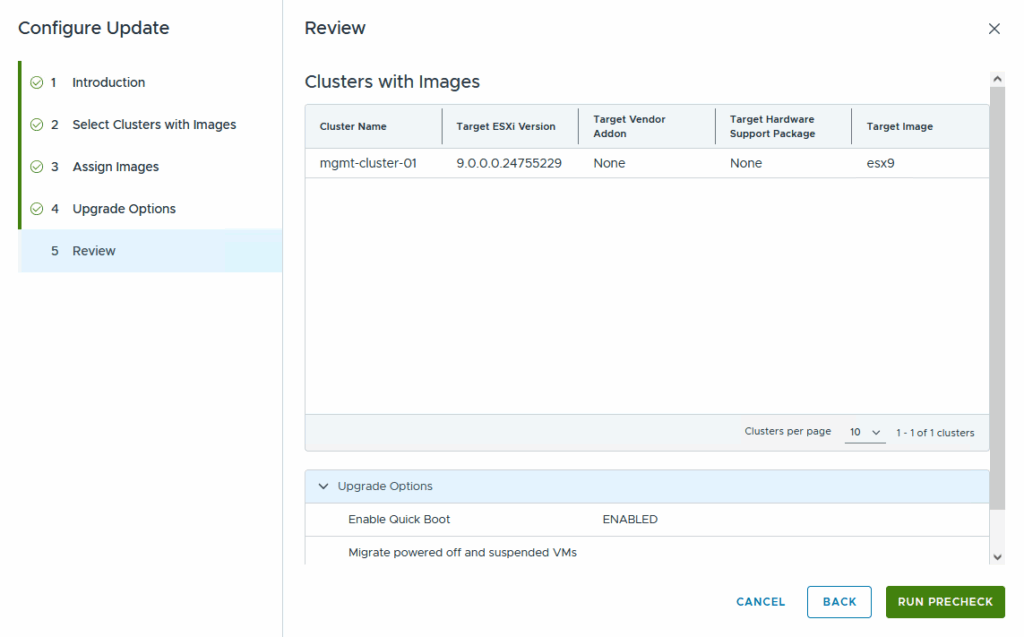

That leaves the last step of this upgrade: the hosts. This is the trickiest bit with an unsupported processor. I had already done the download, so we can dive directly into the configuration of the upgrade:

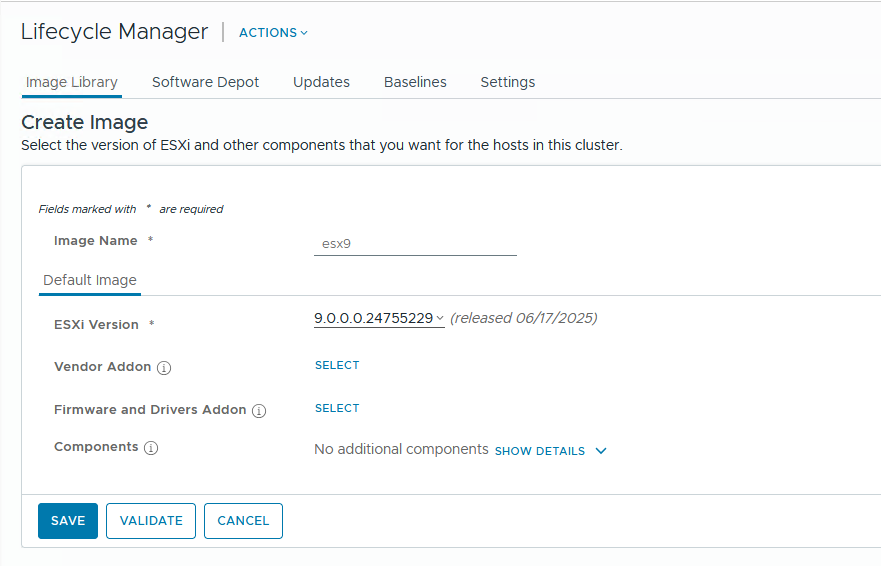

We do need to configure an image that will be assigned to the hosts. This image will consist of ESXi 9. We will create this image on the vCenter Server first. In our case, the image will contain just the ESX version, nothing else.

But before I could create an image, I had some trouble with downloading the new (ESX9) images. I ran into the issue that my updates wouldn’t sync. This had to do with duplicate entries in my VUM database and I received errors like:

error vmware-vum-server[58900] [Originator@6876 sub=Default] [VdbStatement] SQLError was thrown: "ODBC error: (23505) - ERROR: duplicate key value violates unique constraint "uk_vci_updates"^M--> DETAIL: Key (meta_uid)=(ESXi70U1-16850804) already exists.

and

error vmware-vum-server[63160] [Originator@6876 sub=Default] [VdbStatement] SQLError was thrown: "ODBC error: (23505) - ERROR: duplicate key value violates unique constraint "uk_vci_updates"^M--> DETAIL: Key (meta_uid)=(Broadcom-ELX-lpfc_900.14.4.390.14-36vmw.900.0.24755229) already exists.;

in /var/log/vmware/vmware-updatemgr/vum-server/vmware-vum-server.log.

The issue is described here: https://knowledge.broadcom.com/external/article?articleNumber=402037 including the changes that need to be made to resolve it.
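To check quickly whether a log shows this same symptom, and which entries are duplicated, the error lines can be scanned with a regular expression. The following is a minimal sketch and not part of the KB procedure: the function name and pattern are my own, and the sample text is just the two error lines quoted above. It does not change anything in the database.

```python
import re

# Matches the PostgreSQL duplicate-key error as it appears in the log excerpt
# above. DOTALL lets the pattern span the line break before "--> DETAIL".
DUPLICATE_KEY = re.compile(
    r'duplicate key value violates unique constraint "(?P<constraint>[^"]+)"'
    r'.*?Key \((?P<column>\w+)\)=\((?P<value>[^)]+)\) already exists',
    re.DOTALL,
)


def find_duplicate_keys(log_text):
    """Return (constraint, column, value) for every duplicate-key error found."""
    return [
        (m["constraint"], m["column"], m["value"])
        for m in DUPLICATE_KEY.finditer(log_text)
    ]


# The two error lines quoted in this article.
SAMPLE_LOG = '''error vmware-vum-server[58900] [Originator@6876 sub=Default] [VdbStatement] SQLError was thrown: "ODBC error: (23505) - ERROR: duplicate key value violates unique constraint "uk_vci_updates"^M--> DETAIL: Key (meta_uid)=(ESXi70U1-16850804) already exists.
error vmware-vum-server[63160] [Originator@6876 sub=Default] [VdbStatement] SQLError was thrown: "ODBC error: (23505) - ERROR: duplicate key value violates unique constraint "uk_vci_updates"^M--> DETAIL: Key (meta_uid)=(Broadcom-ELX-lpfc_900.14.4.390.14-36vmw.900.0.24755229) already exists.;'''

if __name__ == "__main__":
    for constraint, column, value in find_duplicate_keys(SAMPLE_LOG):
        print(f"{constraint}: {column} = {value}")
```

On a real system, the input would be the contents of vmware-vum-server.log instead of the sample string.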

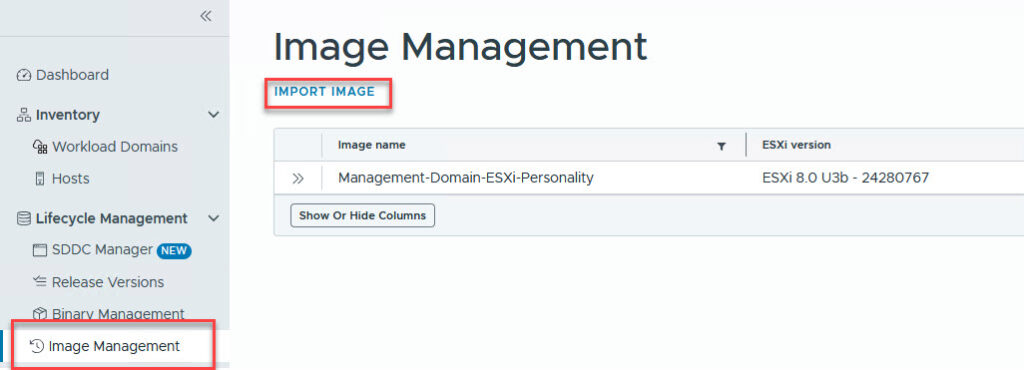

After following the instructions to resolve the issue, I was able to sync updates and create a new image based on the right ESXi version:

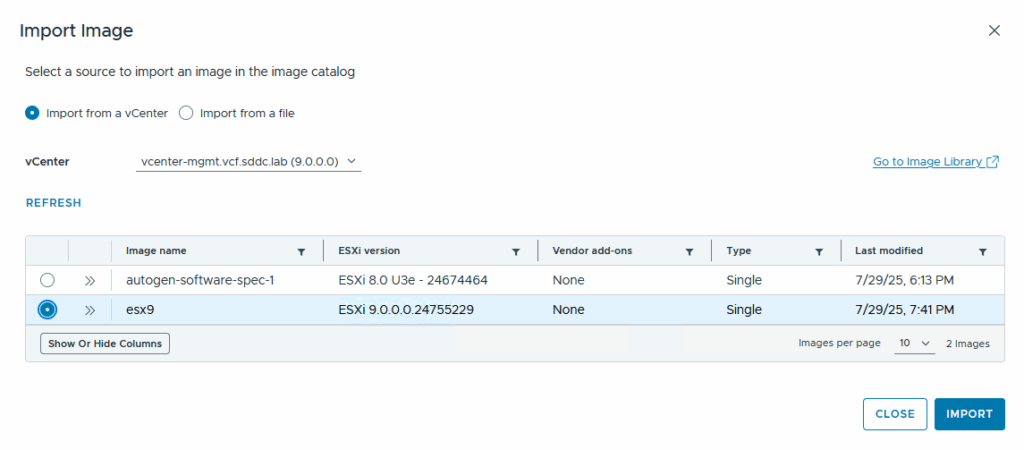

This is then validated and saved and can be imported into SDDC Manager here:

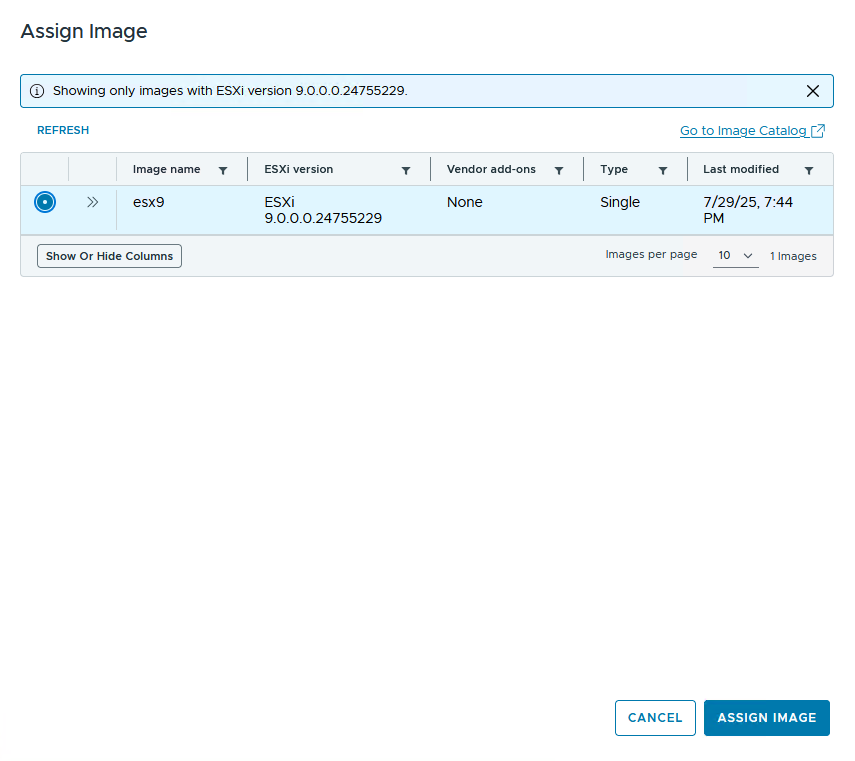

And then it is available in the upgrade configuration of the Management Cluster, to be assigned to my cluster:

And after the configuration is complete, we can run the precheck.

We can follow this precheck in vCenter Server as well:

The warning is expected; the change I described at the beginning of the article (editing boot.cfg) should cover that.

The end result of the precheck in SDDC Manager:

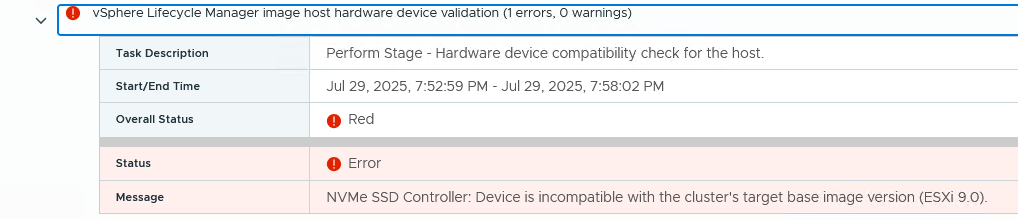

The errors are as follows:

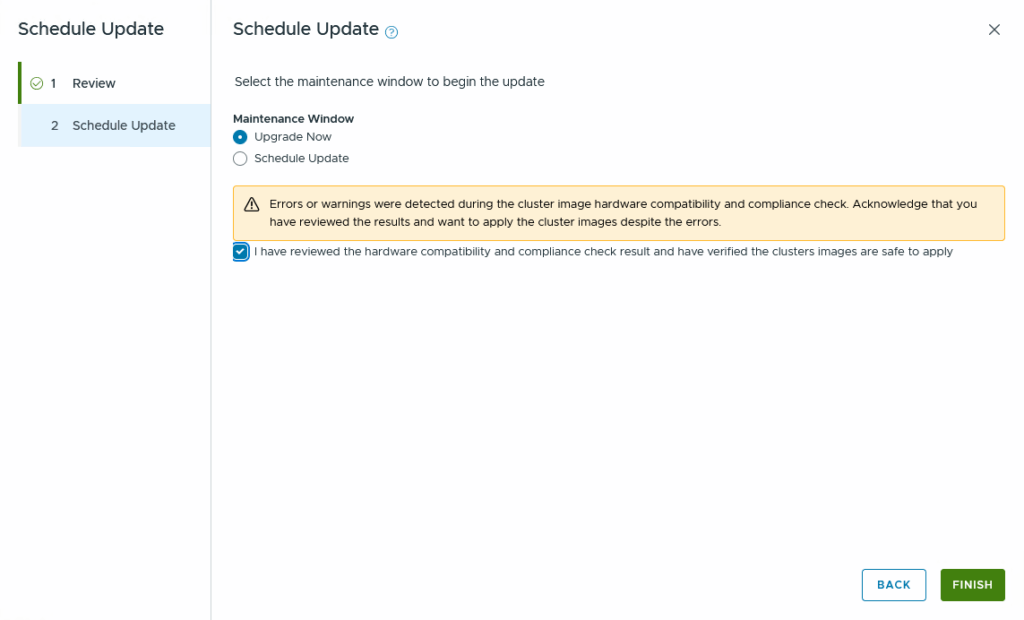

For all four hosts. This does not block us from continuing, so we soldier on:

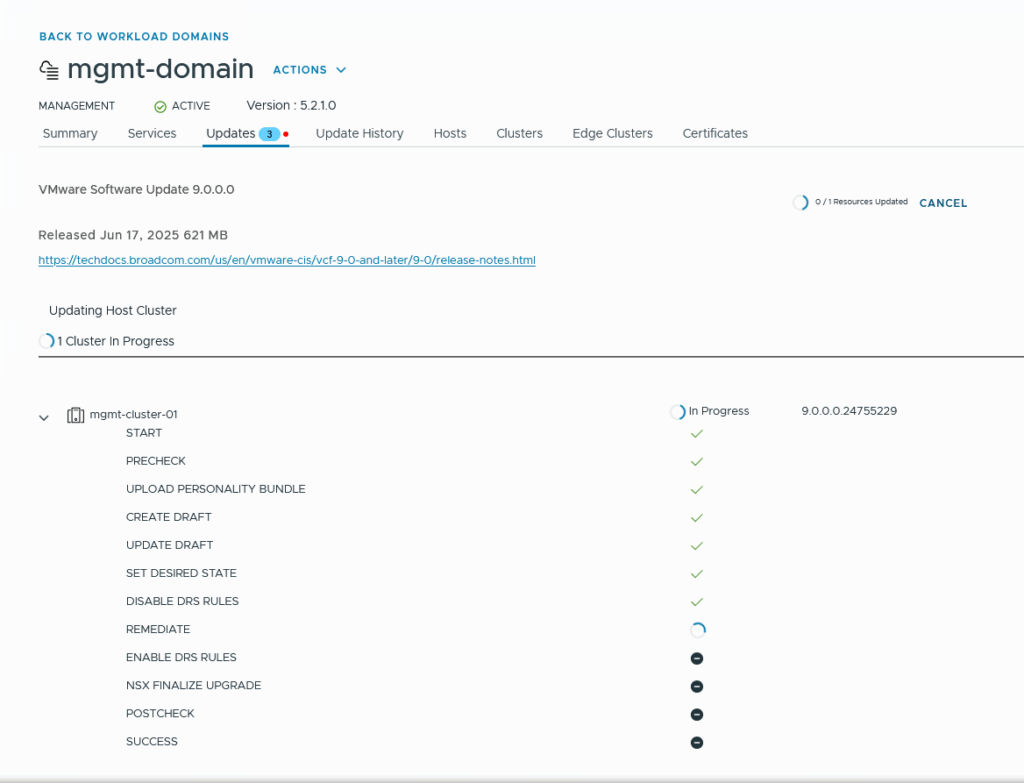

So, there she goes:

And of course we can follow this in vCenter Server and by looking at the console of the nested hosts:

After putting the host in Maintenance Mode (and evacuating vSAN, which is configured with FTT=0 ;)), the actual upgrade is pretty fast:

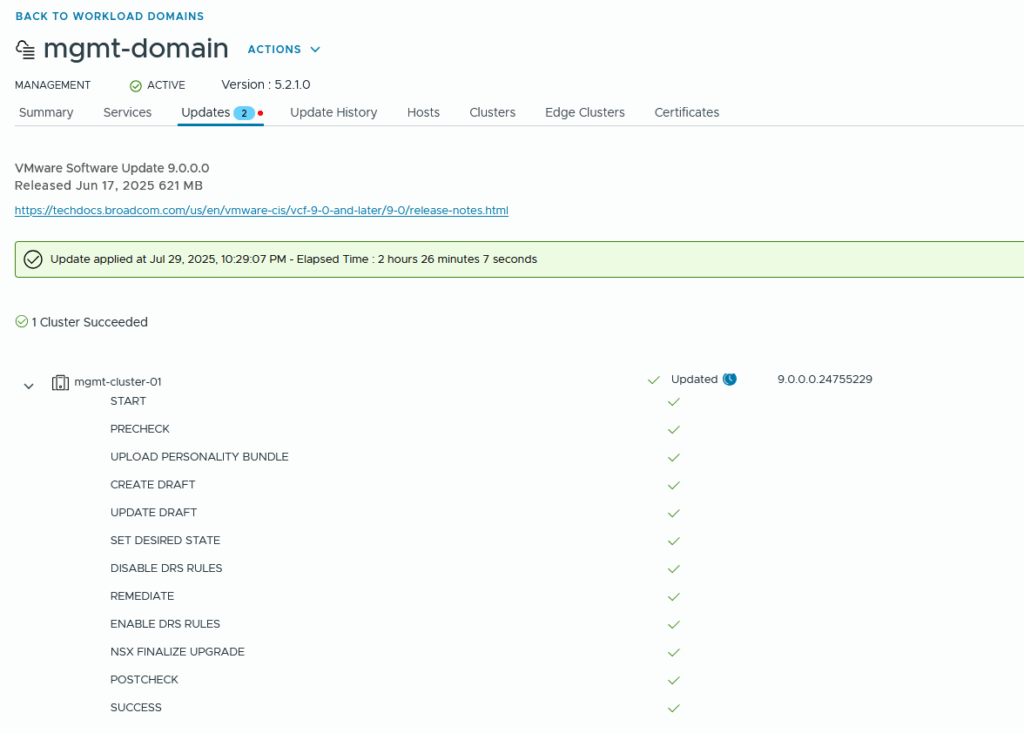

And after almost 2.5 hours, everything is updated:

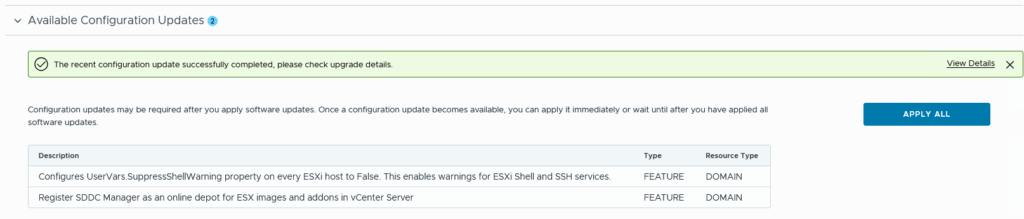
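For a rough idea of the total time to budget, the durations mentioned so far can be added up. This is only a back-of-the-envelope sketch. It rounds "almost two hours" and "almost 2.5 hours" up to the full value, so treat the result as an upper estimate for my lab. It leaves out downloads, prechecks and the SDDC Manager upgrade itself, for which I did not note timings.

```python
from datetime import timedelta

# Approximate durations from this article, rounded up (lab-specific).
steps = [
    ("NSX upgrade", timedelta(hours=2)),
    ("vCenter Server upgrade", timedelta(hours=1, minutes=45)),
    ("Host upgrade (four nested hosts)", timedelta(hours=2, minutes=30)),
]

# Sum the durations, starting from a zero timedelta.
total = sum((duration for _, duration in steps), timedelta())

if __name__ == "__main__":
    for name, duration in steps:
        print(f"{name}: {duration}")
    print(f"Total: {total}")  # Total: 6:15:00
```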

Two more steps to take. First, we apply the configuration changes:

which takes about 15 seconds and leads to:

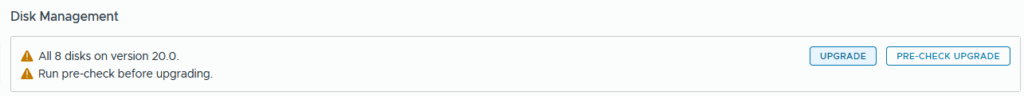

Finally, we upgrade the vSAN Disk Format:

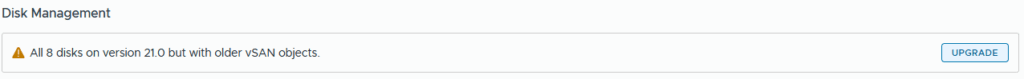

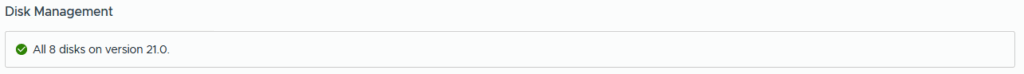

And after that, the vSAN objects:

And that concludes the upgrade. One little issue remains: I don’t have the licenses yet, so I am running on a 90-day trial.