Expand root volume for AHV host

First of all, a disclaimer. This is not a supported method to handle this. If you run into this issue, open a support case and have Nutanix Support help you remediate it.

Second disclaimer: this was not the solution for the problem I faced. I am still looking into that.

For my environment, I am running Nutanix CE on a nested host in a non-production environment, so I didn’t want to bother support and this was a learning opportunity for me :).

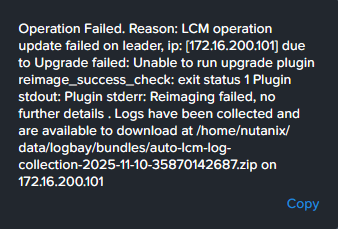

All that being said, when upgrading from AHV 10.0.1.1 to 10.3.x I ran into an issue where the upgrade failed. The message I got from LCM was:

The important part here is: “Unable to run upgrade plugin reimage_success_check: exit status 1 Plugin stdout: Plugin stderr: Reimaging failed”.

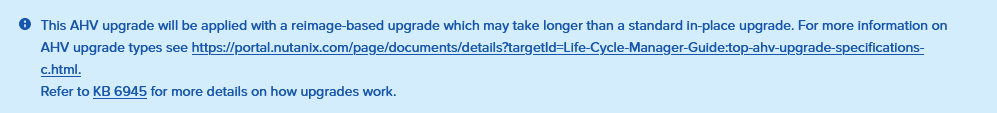

This indicated to me that a reimaging was taking place, instead of an in-place upgrade (which is also shown in LCM:

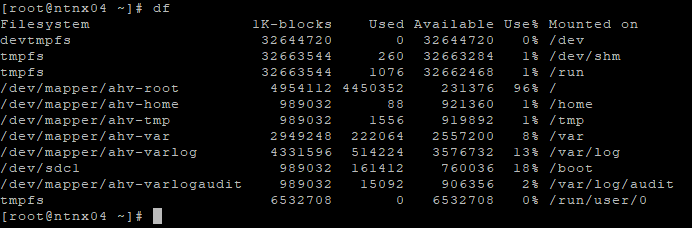

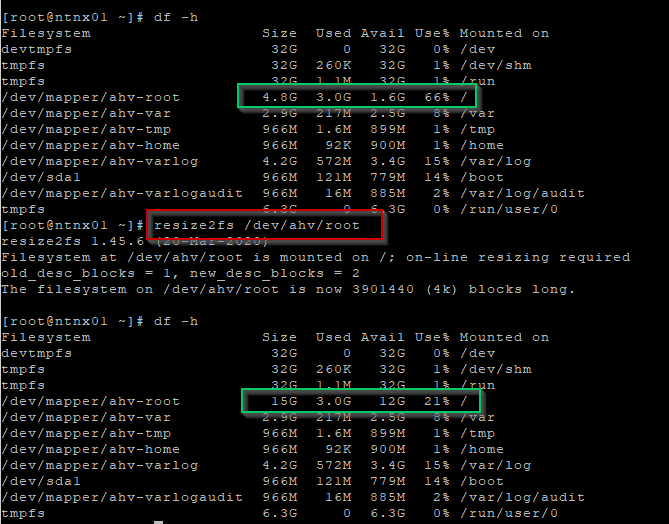

). But in order to do this reimaging, there should be enough disk space to do this and I suspected that that was not the case. If I ran a “df” on my host, it showed that there was not a lot of fee space available in my root volume:

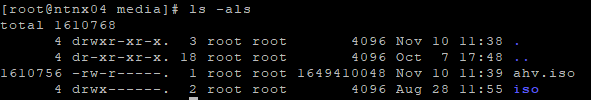

This is after the failure. So I looked for large files, and found one, the file that is used for the upgrade:

I removed the file and that cleared up some space, but I suspected more space might be needed, so I decided to expand the root volume for the AHV host. And again, don’t do this in production environments.
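For reference, a search for large files like the one described above could look roughly like this. It is a minimal sketch, not the exact command I ran: big_files is a hypothetical helper name, and the 500M default threshold is an arbitrary assumption.

```shell
#!/bin/sh
# Sketch: list regular files above a size threshold on a single filesystem.
# big_files is a hypothetical helper; the default threshold is an assumption.
big_files() {
    dir="${1:-/}"        # where to start searching
    min="${2:-+500M}"    # find(1) size expression, "+" means "larger than"
    # -xdev keeps find on the filesystem of $dir, so other mounts are skipped;
    # permission errors are hidden to keep the output readable.
    find "$dir" -xdev -type f -size "$min" 2>/dev/null
}
```

Running df -h / before and after removing a file found with big_files / shows how much space was actually reclaimed on the root volume.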

The very first step was expanding the disk of the VM. I grew it from 16 to 26 GB, which would give me 10 GB of extra space to add to the root volume. Then I created a snapshot (the other way around is not possible, because expanding the disk of a snapshotted VM is not supported).

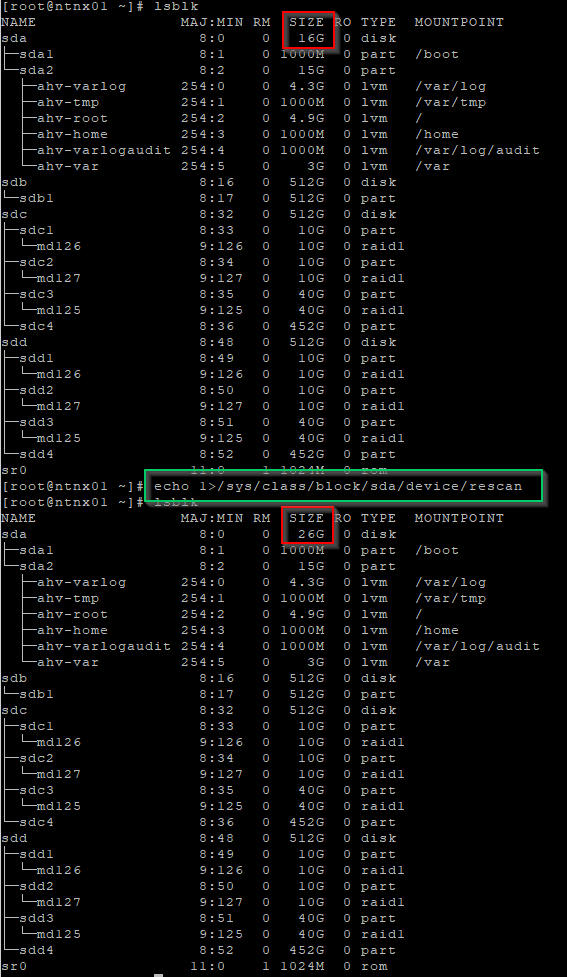

After I did a rescan to have the VM detect that a larger disk was now available, I saw that the /dev/sda device had grown from 16 to 26 GB (as configured on the VM).

The command to do this is (depending on the location of your device): echo 1 > /sys/class/block/sda/device/rescan
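The rescan can also be wrapped in a small helper that checks whether the rescan node exists before writing to it. This is only a sketch: rescan_disk and the SYSFS_ROOT override are hypothetical names I made up for illustration, and the override exists purely so the function can be tried against a scratch directory.

```shell
#!/bin/sh
# Sketch: ask the kernel to re-read the size of a SCSI disk.
# rescan_disk and SYSFS_ROOT are hypothetical; on a real host SYSFS_ROOT
# is left unset so the path resolves under /sys.
rescan_disk() {
    dev="$1"
    sysfs="${SYSFS_ROOT:-/sys}"
    node="$sysfs/class/block/$dev/device/rescan"
    if [ ! -w "$node" ]; then
        echo "no writable rescan node for $dev at $node" >&2
        return 1
    fi
    # The space after the 1 matters: "echo 1>file" redirects stdout
    # (file descriptor 1) instead of writing the character 1.
    echo 1 > "$node"
}
```

On the host itself this would be used as root, for example rescan_disk sda followed by lsblk /dev/sda to confirm the new size.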

I can see that the sda disk is now 26 GB, with 10 GB not in use. I want to add this 10 GB to sda2, where my root volume lives as a logical volume.

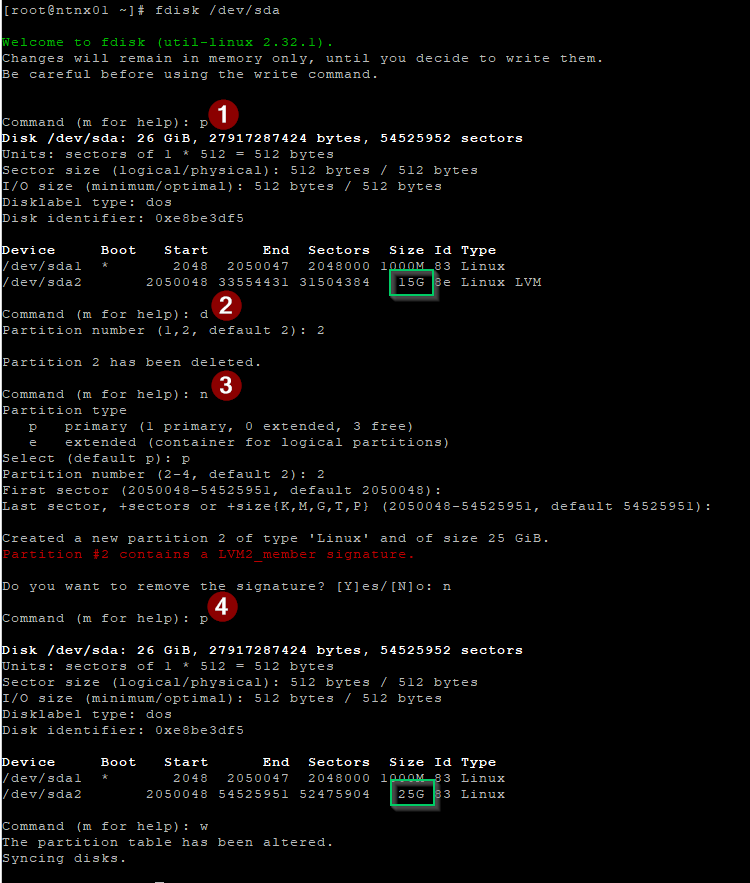

The first step to get this done is to change the partition table. Note that this is a risky activity, so be careful. I used https://next.nutanix.com/discussion-forum-14/can-t-upgrade-ce-because-of-host-disk-usage-check-38592?tid=38592&fid=14 to help me with the right steps.

We do the following steps:

Note: when recreating the partition, I used all the defaults. I did not remove the signature.

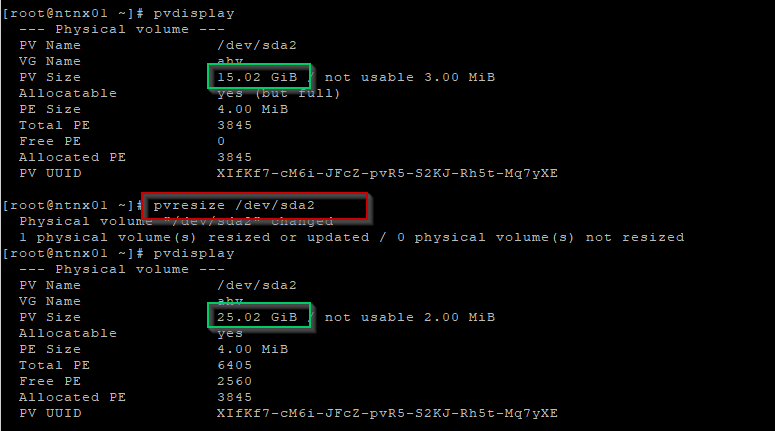

When that is done, the next step is to resize the “physical volume” on which the root volume is located:

Command to do this is: pvresize /dev/sda2

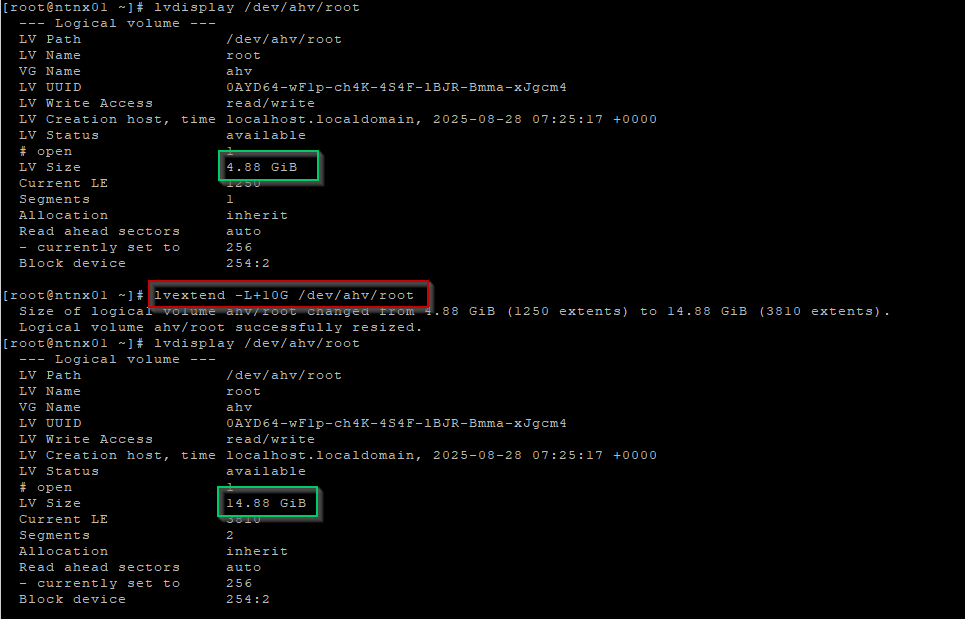

The next step is to extend the logical volume that holds the root filesystem:

Command to do this is: lvextend -L+10G /dev/ahv/root

And finally we resize the filesystem on the root volume:

Command to do this is: resize2fs /dev/ahv/root
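Because these commands are easy to get wrong on the second, third and fourth host, the three resize steps can be put in a small script that only prints what it would do unless told otherwise. This is a sketch under assumptions: run, grow_root and the DRY_RUN, PV, LV and GROW variables are names I made up, with defaults taken from the commands above.

```shell
#!/bin/sh
# Sketch: replay the three resize steps from this post.
# Commands are only printed unless DRY_RUN=0 is set explicitly.
PV="${PV:-/dev/sda2}"        # partition that backs the physical volume
LV="${LV:-/dev/ahv/root}"    # logical volume holding the root filesystem
GROW="${GROW:-+10G}"         # amount to add to the logical volume

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

grow_root() {
    # 1. let LVM see the larger partition
    run pvresize "$PV" || return 1
    # 2. grow the logical volume
    run lvextend -L"$GROW" "$LV" || return 1
    # 3. grow the filesystem to fill the logical volume
    run resize2fs "$LV"
}
```

Calling grow_root prints the three commands, and DRY_RUN=0 grow_root executes them as root. If the volume group ends up with slightly less than 10G free, GROW can be left out in favour of lvextend -l +100%FREE, which hands all remaining free extents to the logical volume.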

After this is all done, I can remove the snapshot and move on to the next host. Once all 4 hosts in the first cluster were done, I retried the upgrade, and it failed again… So apparently it was not the disk size, but now we know for sure and can look for another cause.

The first “next step” I took was upgrading to the latest version in the 10.0 branch, 10.0.1.3 (from 10.0.1.1). After that I retried the upgrade to 10.3.0.2 and it failed again. I did grab a screenshot of the host that was being updated. It has added a boot option.

So another cause, for another day, but expanding the root volume was still a good thing to do :).

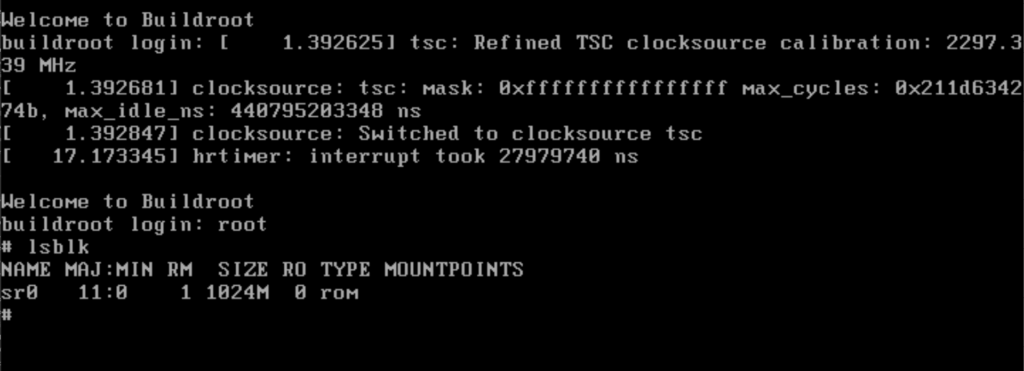

I did find out what the actual cause was, although I don’t have a solution yet. The problem is that the disk is not visible when the VM boots in the “reimage”:

With a little help from a colleague, I was able to boot the host in the “reimage” mode with a command line available to me, and saw that the disks were not available. This is very likely related to the nested topology. I will write a separate blog post if and when I find a solution for this.